During a conversation, data is communicated across one of two types of channels that represent the concepts of call and media flow.

Two-Party Communication

The Conversation class is a container for multiparty communication in Microsoft Unified Communications Managed API 2.0 Core SDK. The properties and methods of this class provide access to many of the other building blocks for communication.

Calls

The call represents a signaling session, based on Session Initiation Protocol (SIP), and provides support for a media session, based on Session Description Protocol (SDP), on top of the signaling session. All call control signals are delivered through the call control channel, which is also known as the signaling channel. A conversation can involve multiple call modalities; however, each modality is associated with only one call in a conversation. For example, a local participant can be engaged with a remote participant in a two-party conversation in which they use both audio and instant messaging. In this example, the conversation will maintain a single call control channel for audio and a single call control channel for instant messaging.
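The rule that each modality maps to only one call in a conversation can be illustrated with a small conceptual model. This is a minimal sketch in Python, not UCMA code: the Call and Conversation classes here are hypothetical stand-ins, and the modality strings "audio" and "im" are assumptions chosen for illustration.

```python
class Call:
    """Hypothetical stand-in for a signaling session of one modality."""

    def __init__(self, modality):
        self.modality = modality


class Conversation:
    """Toy model of a conversation that keeps at most one call per modality."""

    def __init__(self):
        self._calls = {}  # modality name -> Call

    def add_call(self, call):
        # A second call of an already-present modality is rejected.
        if call.modality in self._calls:
            raise ValueError(f"conversation already has a {call.modality} call")
        self._calls[call.modality] = call

    @property
    def modalities(self):
        return sorted(self._calls)


# A two-party conversation that uses both audio and instant messaging:
# one call control channel per modality.
conversation = Conversation()
conversation.add_call(Call("audio"))
conversation.add_call(Call("im"))
print(conversation.modalities)
```

In this model, adding a second "audio" call to the same conversation raises ValueError, which mirrors the one-call-per-modality constraint described above.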

Flows

A flow (or media flow) represents the audio or instant messaging (IM) data that participants send to each other during a conversation. A media flow is delivered through a media-specific channel. For example, IM media is delivered through an IM channel, while audio media is delivered through an audio channel.

After a call is established, media flows are created and managed by media providers. The UCMA 2.0 Core SDK natively supports IM and audio/video providers, and also enables applications to supply their own media providers. A media provider can support one or more media types. The media provider exposes its media flow to the application by way of the call, and the application can configure the media flow using flow settings. The media providers themselves are not exposed to the application nor is there a need for an application to interact with them directly. The conversation connects the call with the appropriate provider depending on the media types supported by the call. For example, an IM conversation will connect to the InstantMessagingProvider, which will create and manage an InstantMessagingFlow. The media provider for a conversation must support all of the media types that are supported by the call.
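The matching of a call to a media provider can be sketched as a subset check: a provider qualifies only if it supports every media type the call supports. The following Python model is an illustration under stated assumptions, not the SDK's implementation. InstantMessagingProvider is named in the text above; the name "AudioVideoProvider", the media type strings, and the select_provider function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaProvider:
    """Hypothetical description of a provider and the media types it supports."""
    name: str
    media_types: frozenset


# Assumed provider table; the media type strings are illustrative only.
PROVIDERS = [
    MediaProvider("InstantMessagingProvider", frozenset({"message"})),
    MediaProvider("AudioVideoProvider", frozenset({"audio", "video"})),
]


def select_provider(call_media_types, providers):
    """Return the first provider that supports all media types of the call."""
    needed = frozenset(call_media_types)
    for provider in providers:
        if needed <= provider.media_types:  # subset test
            return provider
    raise LookupError(f"no provider supports all of {sorted(needed)}")


print(select_provider({"message"}, PROVIDERS).name)
print(select_provider({"audio", "video"}, PROVIDERS).name)
```

An application that supplies its own media provider would, in this model, simply add another entry to the provider table.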

The UCMA 2.0 Core SDK provides support for a group of devices (recorder, player, tone controller, speech recognition, and speech synthesis) through its audio/video flow. For more information, see Audio Devices in UCMA 2.0 Core.

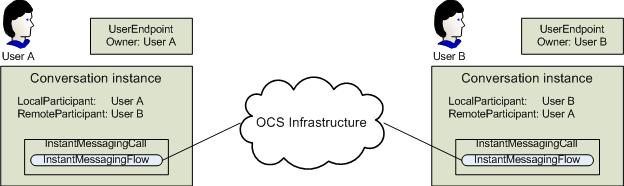

Two-Party Conversation—Unimodal

To begin a two-party conversation, an application must establish a call between the local participant and a remote participant. For a unimodal conversation, an AudioVideoCall instance or an InstantMessagingCall instance performs call control operations. A media flow type that matches the call type, either AudioVideoFlow or InstantMessagingFlow, performs media control operations. The media flow instance can be configured by the use of the applicable template, either AudioVideoFlowTemplate or InstantMessagingFlowTemplate.

The following illustration depicts a two-party conversation using a single media modality: IM. This and other figures in this topic should be considered high-level representations, and as such, are somewhat simplified. Each conversation participant has a UserEndpoint associated with it, although a Conversation instance can be associated with more than one endpoint.

Each conversation participant has a Conversation instance on his or her computer or device. For each Conversation instance, LocalParticipant represents the user to which this Conversation instance belongs. Similarly, the Remote Participant (the actual property on Conversation is a collection named RemoteParticipants) represents the other participant in the conversation. Given that this conversation involves IM, each Conversation instance has an InstantMessagingCall, which in turn has an InstantMessagingFlow. The text of the instant messages flows from one Conversation instance to the other through the Microsoft Office Communications Server infrastructure. Signaling information (the path for this is not shown) passes from one InstantMessagingCall instance to the other, by way of the Office Communications Server infrastructure.

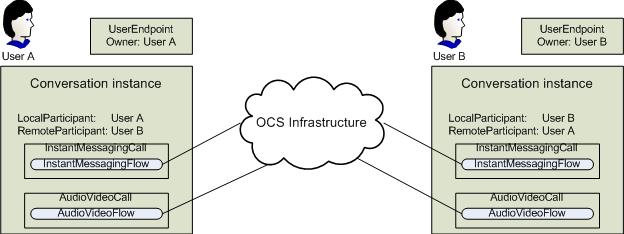

Two-Party Conversation—Multimodal

The second illustration depicts a two-party conversation using two media modalities: IM and audio/video. The only difference between this illustration and the previous one is that now each Conversation instance also has an AudioVideoCall, each of which has an AudioVideoFlow. The IM portion of the conversation media flows through the InstantMessagingFlow instances, while the audio and video flow through the AudioVideoFlow instances. As in the previous illustration, IM data is passed between the InstantMessagingFlow instances by way of the Office Communications Server infrastructure. Audio/video data is passed between the AudioVideoFlow instances, also by way of the Office Communications Server infrastructure. Signaling information passes from one InstantMessagingCall instance to the other, by way of the Office Communications Server infrastructure.

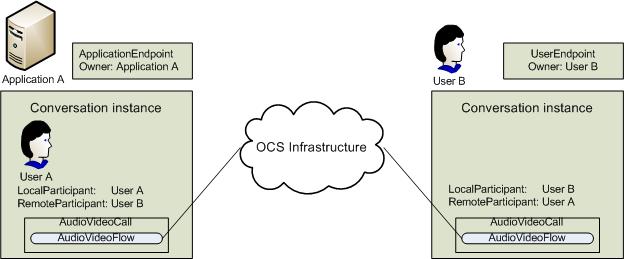

Two-Party Conversation with Impersonation

The third illustration depicts a two-party conversation using audio, but in this illustration an application (Application A) impersonates a user (User A). Unlike the two previous scenarios, the endpoint owner and the local participant of the associated conversation instance are different. Another difference between this scenario and the two previous ones is that the endpoint for Application A must be an ApplicationEndpoint, rather than a UserEndpoint.

Multiparty Communication

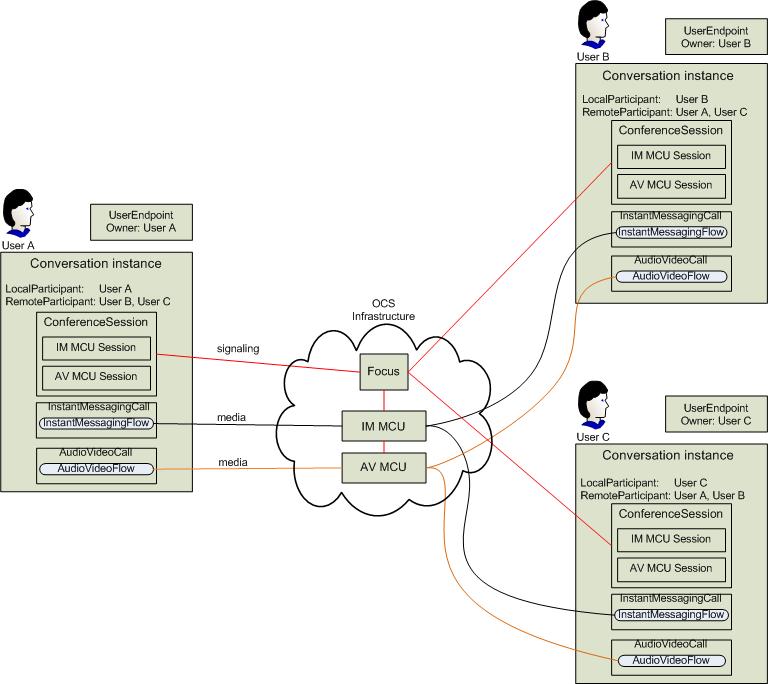

When a conversation involves three or more participants (the local participant and two or more remote participants), the conversation must be escalated to a conference. Depending on the media mode or modes in use, one or more MCUs become involved. Communication between the local and remote participants is mediated by a modality-specific multipoint control unit (MCU). An audio/video MCU mediates audio and video in a conversation, and an IM MCU mediates IM messages in a conversation.
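The escalation rule and the one-MCU-per-modality relationship can be written down as two small functions. This Python sketch only restates the paragraph above; the function names, the modality strings, and the lookup table are assumptions made for illustration and do not correspond to SDK members.

```python
# Assumed mapping from a media modality to the MCU type that mediates it.
MCU_BY_MODALITY = {
    "im": "IM MCU",
    "audio/video": "audio/video MCU",
}


def requires_conference(remote_participant_count):
    """Three or more participants means the local one plus two or more remote."""
    return remote_participant_count >= 2


def mcus_for(modalities):
    """Return the MCU types that become involved for the modalities in use."""
    return sorted({MCU_BY_MODALITY[m] for m in modalities})


print(requires_conference(1))           # two-party conversation
print(requires_conference(2))           # must be escalated to a conference
print(mcus_for(["im", "audio/video"]))  # one MCU per modality in use
```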

Unimodal MCU Session

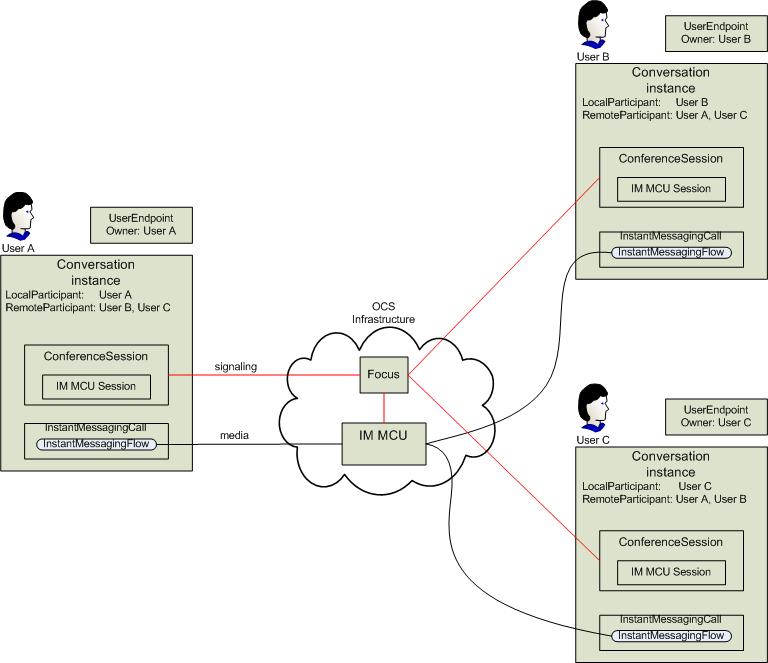

The next illustration depicts a unimodal IM conversation that has been escalated to a conference. The significant differences between this conference and the two-party conversations shown earlier are as follows:

- A ConferenceSession appears in each conversation instance.

- An IM MCU Session instance (the actual class name is InstantMessagingMcuSession) appears in each ConferenceSession.

- The Focus and IM MCU now play roles in the Office Communications Server infrastructure.

As the next illustration shows, signaling information passes from a given IM MCU Session to the Focus, and then to the IM MCU Sessions on the conversation instances of the other participants. Signaling information also passes from the Focus to the IM MCU. IM information (identified as media in the illustration) passes from the InstantMessagingFlow in one conversation instance to the IM MCU, and then to the InstantMessagingFlow of each other participant.

Multimodal MCU Session

The last illustration in this topic depicts a multimodal conference. The most significant differences between this type of conference and the unimodal conference shown in the previous illustration are as follows:

- Each ConferenceSession instance now has an AV MCU Session instance (the actual name of this class is AudioVideoMcuSession). Each media modality requires its own MCU Session to handle call control.

- Each ConferenceSession instance now has an AudioVideoCall instance (with its associated AudioVideoFlow).

- An additional MCU is shown in the Office Communications Server infrastructure.

The channels for signaling and media are similar to, but slightly more complex than, those for a unimodal conference. In a given conversation instance, the MCU Sessions in ConferenceSession send signaling information to the Focus and to their corresponding MCUs, and the Focus passes this information on to the MCU Sessions in the other conference participants. IM and audio/video information travels from an InstantMessagingFlow or AudioVideoFlow on one Conversation instance to the appropriate MCU. From there, this information passes to the InstantMessagingFlow or AudioVideoFlow on the other Conversation instances.
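The media path described above, from a flow on one conversation instance to the modality-specific MCU and then to the matching flows on the other instances, can be modeled as a simple relay. The following Python sketch covers only the media path, not the signaling path through the Focus. The Participant and Conference classes, the modality strings, and the payloads are hypothetical and exist only for this illustration.

```python
class Participant:
    """Toy conversation instance with one receive buffer (flow) per modality."""

    def __init__(self, name, modalities):
        self.name = name
        self.flows = {m: [] for m in modalities}  # modality -> received items


class Conference:
    """Toy conference with one MCU (a list of joined participants) per modality."""

    def __init__(self, modalities):
        self.mcus = {m: [] for m in modalities}

    def join(self, participant):
        for modality in participant.flows:
            self.mcus[modality].append(participant)

    def send(self, sender, modality, payload):
        # The MCU for this modality relays the payload to every other
        # participant's flow of the same modality, never back to the sender.
        for participant in self.mcus[modality]:
            if participant is not sender:
                participant.flows[modality].append((sender.name, payload))


conference = Conference(["im", "audio/video"])
alice = Participant("alice", ["im", "audio/video"])
bob = Participant("bob", ["im", "audio/video"])
carol = Participant("carol", ["im", "audio/video"])
for p in (alice, bob, carol):
    conference.join(p)

conference.send(alice, "im", "hello")
conference.send(bob, "audio/video", "<audio frame>")
print(carol.flows)
```

Keeping a separate participant list per modality reflects the point that IM and audio/video traffic never share an MCU, even within the same conference.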